Make the eyes go away

I’ve written before about building agents and calling tools directly, but until now I’ve largely avoided MCP. Now I’ve started a new job, and I needed to understand MCP servers better. I’ve read the spec, but I felt there was a disconnect between what it describes and what has actually become popular.

So, as I often do with these types of problems, I decided to build my own MCP server. That way I can point various off-the-shelf agents with MCP support at my server and observe the interactions. I can then use what I learn to make better use of those capabilities in my own agents.

Even though I’m building this as a learning tool, I wanted it to be genuinely useful. I’ve used the i3 window manager for some time now, and I’ve always known that it’s easily programmable and has a first-class Go library. So my initial concept was an MCP server that exposes the functionality of my running i3 window manager through natural language.
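"Programmable" here means i3's IPC interface: a Unix socket that accepts framed binary messages. Each message is the magic string `i3-ipc`, then a 32-bit payload length, then a 32-bit message type, then the payload. The go-i3 library handles all of this for you. Purely to show what goes over the wire, here is a stdlib-only sketch of the framing. `encodeIPC` and `decodeHeader` are hypothetical names, not go-i3 functions. Because i3 uses the host's native byte order, the sketch assumes a little-endian machine such as x86-64.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// ipcMagic prefixes every i3 IPC message.
const ipcMagic = "i3-ipc"

// headerLen is the magic string plus two 32-bit fields (length, type).
const headerLen = len(ipcMagic) + 8

// A subset of i3 IPC message types.
const (
	msgRunCommand    uint32 = 0
	msgGetWorkspaces uint32 = 1
	msgGetTree       uint32 = 4
)

// encodeIPC frames payload as an i3 IPC message. i3 reads the length and
// type in the host's native byte order; this sketch assumes little-endian.
func encodeIPC(msgType uint32, payload []byte) []byte {
	msg := make([]byte, 0, headerLen+len(payload))
	msg = append(msg, ipcMagic...)
	msg = binary.LittleEndian.AppendUint32(msg, uint32(len(payload)))
	msg = binary.LittleEndian.AppendUint32(msg, msgType)
	return append(msg, payload...)
}

// decodeHeader validates the magic string and returns the payload length and
// message type stored in the first headerLen bytes of a message.
func decodeHeader(hdr []byte) (length, msgType uint32, err error) {
	if len(hdr) < headerLen || string(hdr[:len(ipcMagic)]) != ipcMagic {
		return 0, 0, errors.New("not an i3 IPC header")
	}
	length = binary.LittleEndian.Uint32(hdr[len(ipcMagic):])
	msgType = binary.LittleEndian.Uint32(hdr[len(ipcMagic)+4:])
	return length, msgType, nil
}

func main() {
	// RUN_COMMAND carries a plain i3 command string as its payload.
	msg := encodeIPC(msgRunCommand, []byte("workspace number 7"))
	n, t, err := decodeHeader(msg)
	fmt.Println(len(msg), n, t, err) // 32 18 0 <nil>
}
```

On a real system, the socket path comes from `i3 --get-socketpath` or the `I3SOCK` environment variable. Higher-level calls like GetTree and GetWorkspaces are just these messages with an empty payload and the matching type.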

Back to Claude Code?

I started where I usually start: with local models. I threw qwen3:30b at the problem with various larger context configurations, but the results were only rough sketches that wouldn’t compile. I did some minimal manual work to get it compiling and then onboarded it to Claude Code. I’m back on the Claude Pro plan. I have a lot I need to get done, and right now it’s the best no-bullshit toolset paired with its own models.

👤 User

Please familiarize yourself with this project, I’d like to discuss a new feature.

🤖 Claude

I’ll explore this project to understand its structure and purpose.

🤖 Claude

This is ithreemcp, an MCP (Model Context Protocol) server that bridges AI/LLM clients with the i3 tiling window manager.

Current functionality:

- GetTree - Returns the complete i3 window layout hierarchy

- GetWorkspaces - Returns details about all workspaces

Tech stack: Go 1.25.3, using the MCP Go SDK and go-i3 bindings

Architecture: Simple bridge pattern - MCP clients communicate via stdin/stdout, and the server translates requests to i3 IPC calls.

The codebase is compact (~112 lines in main.go) and follows standard Go conventions.

What new feature would you like to discuss?

👤 User

I’d like an end-user, with an agent configured to use this MCP server to be able to say something like, “move the firefox window to workspace 7”. Can you propose the tools you think we should add to this MCP server to best allow an agent to accomplish the task?

🤖 Claude

Great use case! Let me think through what an agent would need to accomplish “move the firefox window to workspace 7”.

And away we go…

Twenty-five commits later, split over two evenings, a GitHub repo was born: ithreemcp.
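Claude's full tool proposal isn't reproduced here. Whatever tools sit in front of it, though, "move the firefox window to workspace 7" ultimately has to become an i3 command. Such a command pairs criteria in brackets with an action. Below is a minimal, stdlib-only sketch of building that string. `moveToWorkspaceCommand` is a hypothetical helper, not ithreemcp's code. It matches on the window's WM_CLASS class, which is an assumption about how the window should be identified.

```go
package main

import (
	"fmt"
	"strings"
)

// moveToWorkspaceCommand builds an i3 command that moves every window whose
// WM_CLASS class matches classPattern to the numbered workspace.
// i3 treats criteria values as regular expressions; this sketch only escapes
// double quotes so the pattern stays inside its quoted criteria value.
func moveToWorkspaceCommand(classPattern string, workspace int) string {
	escaped := strings.ReplaceAll(classPattern, `"`, `\"`)
	return fmt.Sprintf(`[class="%s"] move container to workspace number %d`, escaped, workspace)
}

func main() {
	fmt.Println(moveToWorkspaceCommand("firefox", 7))
	// [class="firefox"] move container to workspace number 7
}
```

Criteria matching is case-sensitive by default. Assuming i3's PCRE-based matching, a pattern like `(?i)firefox` would sidestep guessing the exact capitalization of Firefox's class.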

Using our MCP Server

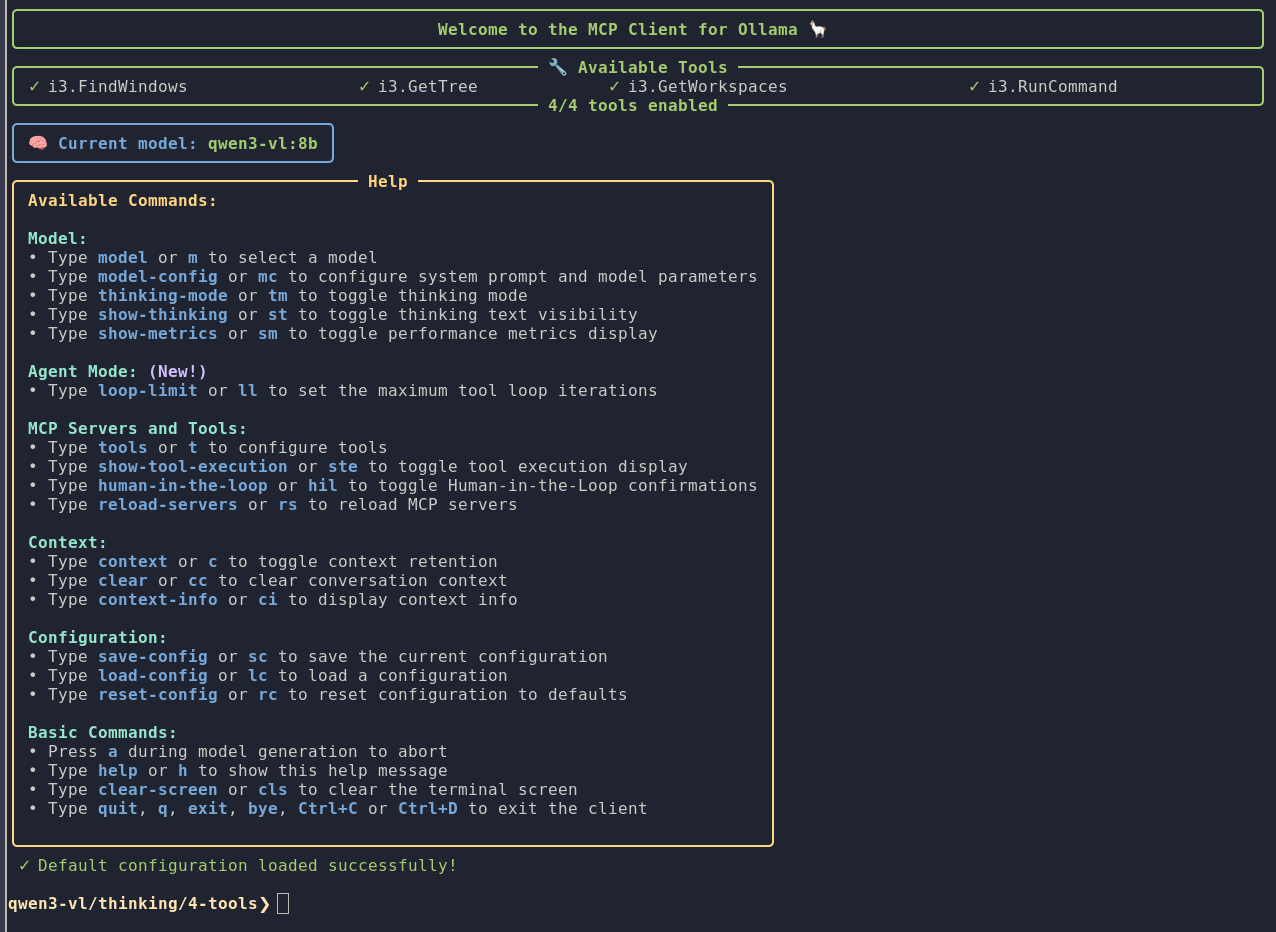

As I originally stated, my intent was to learn how clients interact with MCP servers. I wanted to start by seeing if any of the local models could make it work, but I wasn’t sure which agent to use. Rather than try to force it onto one of the coding agents, I found MCP Client for Ollama. It automatically discovers your Ollama models (today I’m using qwen3-vl:8b) and can be pointed at ithreemcp by adjusting the sample configuration we provide:

uvx ollmcp --servers-json ithreemcp.json
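With the stdin/stdout setup the project describes, the client launches ithreemcp as a subprocess. It then exchanges JSON-RPC 2.0 messages over the pipes, which is MCP's stdio transport: each message is a single line of JSON. After the `initialize` handshake, invoking a tool is a `tools/call` request. The sketch below encodes one such line using only the standard library. `toolCallLine` is a hypothetical helper, and the request id is arbitrary. GetWorkspaces is one of the tools the server started with.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// rpcRequest is a JSON-RPC 2.0 request, the envelope MCP uses.
type rpcRequest struct {
	JSONRPC string `json:"jsonrpc"`
	ID      int    `json:"id"`
	Method  string `json:"method"`
	Params  any    `json:"params,omitempty"`
}

// callToolParams holds the params of an MCP "tools/call" request.
type callToolParams struct {
	Name      string         `json:"name"`
	Arguments map[string]any `json:"arguments"`
}

// toolCallLine encodes a tools/call request as one newline-terminated line,
// the framing used by MCP's stdio transport (no embedded newlines).
func toolCallLine(id int, tool string, args map[string]any) ([]byte, error) {
	if args == nil {
		args = map[string]any{} // send {} rather than null
	}
	b, err := json.Marshal(rpcRequest{
		JSONRPC: "2.0",
		ID:      id,
		Method:  "tools/call",
		Params:  callToolParams{Name: tool, Arguments: args},
	})
	if err != nil {
		return nil, err
	}
	return append(b, '\n'), nil
}

func main() {
	line, err := toolCallLine(2, "GetWorkspaces", nil)
	if err != nil {
		panic(err)
	}
	fmt.Print(string(line))
}
```

Teeing these lines to a log, in both directions, is a cheap way to watch exactly what a client asks for.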

I like to start by giving it something I don’t think it can handle. So, I launched xeyes.

And then I told the model:

make the eyes go away

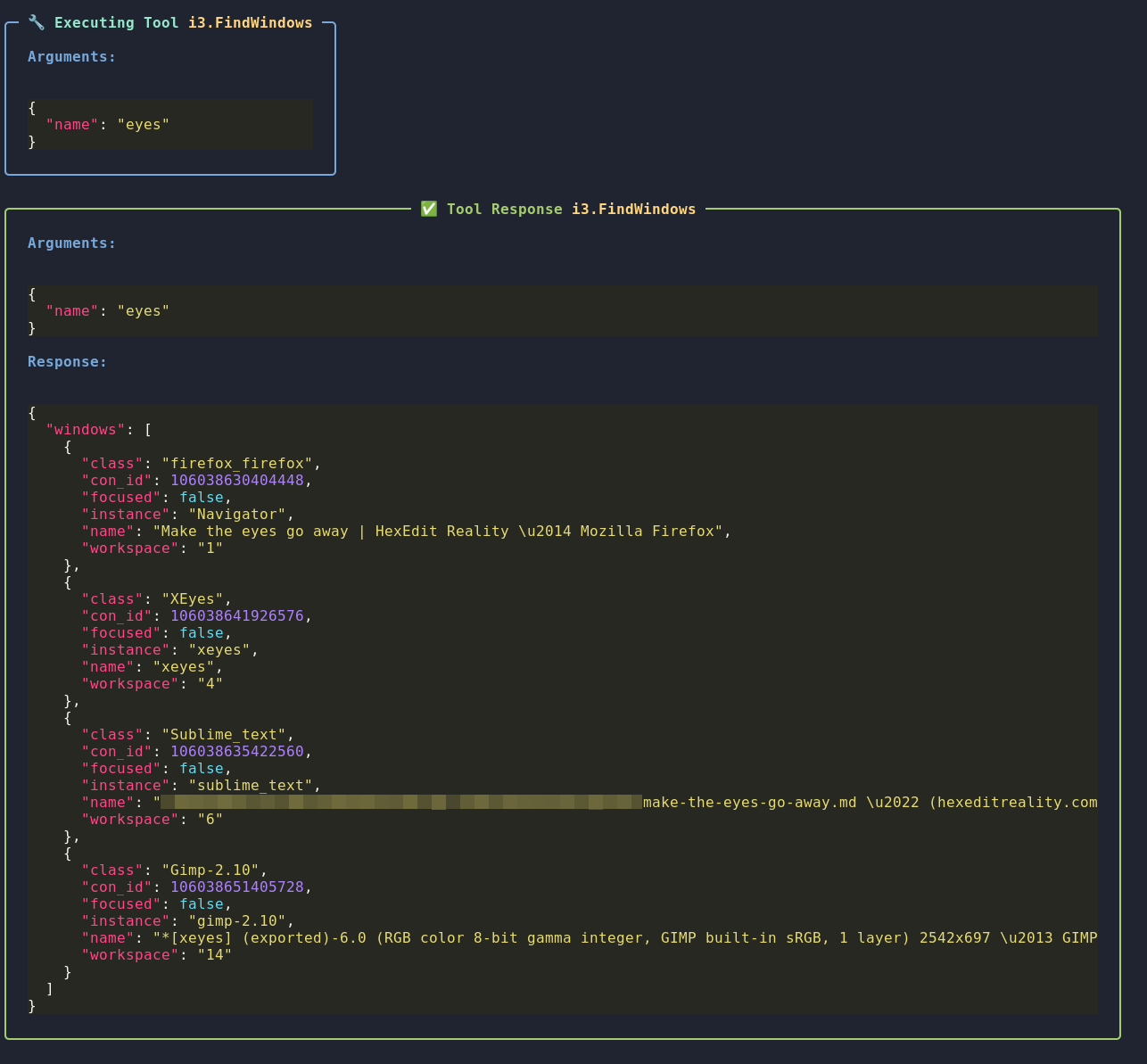

I hadn’t configured any sort of system prompt. Maybe it picked up a default one from somewhere about being a helpful agent, but it was never told the context surrounding its tools. From the tool descriptions, it could infer that it was querying and controlling an i3 window manager, but nothing told it so explicitly. And the RunCommand tool takes i3 command syntax that the server never describes, so the model must either remember that syntax from its training or stumble onto something that works.
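One cheap mitigation is to put that missing context into the tool definition itself, since the description is the only documentation a client's model is guaranteed to see. Below is a sketch of a RunCommand entry shaped like an MCP `tools/list` result (name, description, JSON Schema `inputSchema`). The description text, the `command` argument name, and the example con_id are all hypothetical, not what ithreemcp currently ships.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// toolDef mirrors the shape of one entry in an MCP tools/list result.
type toolDef struct {
	Name        string         `json:"name"`
	Description string         `json:"description"`
	InputSchema map[string]any `json:"inputSchema"`
}

// runCommandTool returns a hypothetical RunCommand definition whose
// description states the context (i3) and shows the command syntax.
func runCommandTool() toolDef {
	desc := strings.Join([]string{
		"Run a command in the user's i3 window manager (same syntax as i3-msg).",
		"Target windows with criteria in brackets; without criteria the command applies to the FOCUSED window.",
		`Examples: [con_id=94285536] kill | [class="firefox"] move container to workspace number 7 | workspace number 3`,
	}, "\n")
	return toolDef{
		Name:        "RunCommand",
		Description: desc,
		InputSchema: map[string]any{
			"type": "object",
			"properties": map[string]any{
				"command": map[string]any{"type": "string", "description": "An i3 command string"},
			},
			"required": []string{"command"},
		},
	}
}

func main() {
	b, err := json.MarshalIndent(runCommandTool(), "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(b))
}
```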

It figures out that it should search the windows for “eyes”. The search even returns three other matches: Sublime Text drafting this post, Firefox previewing the page, and GIMP editing the screenshots. But it correctly works out that those aren’t what I’m talking about.
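Stray matches like these are what you'd expect if the search does case-insensitive substring matching on window titles. I haven't reproduced ithreemcp's actual search here. This is a minimal sketch that walks a trimmed, hypothetical `node` type standing in for i3's GET_TREE JSON, which has many more fields. The window titles in `main` are made up.

```go
package main

import (
	"fmt"
	"strings"
)

// node is a trimmed-down, hypothetical mirror of one entry in i3's layout tree.
type node struct {
	ID            int64  // i3 container id (usable as con_id)
	Window        int64  // X11 window id; 0 for pure containers
	Name          string // window title
	Class         string // WM_CLASS class
	Nodes         []*node
	FloatingNodes []*node
}

// findWindows walks the tree depth-first and returns every window whose title
// or class contains query, ignoring case. Pure containers are skipped.
func findWindows(root *node, query string) []*node {
	q := strings.ToLower(query)
	var out []*node
	var walk func(n *node)
	walk = func(n *node) {
		if n.Window != 0 && (strings.Contains(strings.ToLower(n.Name), q) ||
			strings.Contains(strings.ToLower(n.Class), q)) {
			out = append(out, n)
		}
		for _, c := range n.Nodes {
			walk(c)
		}
		for _, c := range n.FloatingNodes {
			walk(c)
		}
	}
	walk(root)
	return out
}

func main() {
	// Made-up windows: only xeyes is the one the user means.
	root := &node{Nodes: []*node{
		{ID: 1, Window: 101, Name: "xeyes", Class: "XEyes"},
		{ID: 2, Window: 102, Name: "make-the-eyes-go-away.md - Sublime Text", Class: "Sublime_text"},
		{ID: 3, Window: 103, Name: "bash", Class: "XTerm"},
	}}
	for _, w := range findWindows(root, "eyes") {
		fmt.Printf("con_id=%d %q (%s)\n", w.ID, w.Name, w.Class)
	}
}
```

Returning the class alongside the title gives the model what it needs to tell `XEyes` apart from an editor that merely mentions eyes.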

It then proceeds to use the correct syntax to kill the right window, and explains that the eyes should be gone.

In fairness, it sometimes gets the i3 command syntax wrong, and at least once it killed the xterm from which I launched xeyes (not like I had any other important tabs open or anything). But this was just some initial research. I think with a proper system prompt and some i3 syntax examples, it would perform well.
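I don't know exactly which command took out the xterm. One general hazard is that i3 applies a command without criteria to the focused window. A server-side guard can refuse to build an untargeted `kill` at all. The sketch below is hypothetical (`killCommand` is not part of ithreemcp) and addresses exactly one container by its con_id.

```go
package main

import (
	"errors"
	"fmt"
)

// killCommand builds an i3 command that closes exactly one container,
// addressed by its con_id (the "id" field of a node in i3's tree).
// A bare "kill" would act on whichever window has focus, so this sketch
// refuses to produce one.
func killCommand(conID int64) (string, error) {
	if conID <= 0 {
		return "", errors.New("refusing to build an untargeted kill: con_id required")
	}
	return fmt.Sprintf("[con_id=%d] kill", conID), nil
}

func main() {
	cmd, err := killCommand(94285536)
	fmt.Println(cmd, err)
	if _, err := killCommand(0); err != nil {
		fmt.Println(err)
	}
}
```

Exposing a dedicated, id-only kill tool instead of free-form RunCommand would be the stricter version of the same idea.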

Reflections

As much as I love local models, if you need to be productive writing code, I strongly suggest finding a frontier model whose privacy options and cost you’re comfortable with. I concede that, for me, it is a compromise for the moment. But don’t misread what I wrote: local models are still a good fit for many tasks, particularly tasks that don’t require a large context window. As you saw in this post, my sweet spot is using frontier models to hack on tooling for the models I can run at home.

Building ithreemcp was just the first step in my learning process. MCP has features I glossed over, namely Prompts, Resources, Sampling, and Elicitation. I never really understood why Prompts existed in MCP. But after watching qwen3-vl:8b occasionally stumble on i3 syntax, I get it now: an MCP server can ship prompts that teach clients how to use its tools effectively. I look forward to exploring these topics further.
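To make the Prompts idea concrete, here is a sketch of what an i3 server might return for a `prompts/get` request. The result is an optional description plus a list of messages, each with a role and text content. The prompt text and the `i3Primer` name are hypothetical; ithreemcp doesn't ship anything like this yet.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// textContent is the text variant of MCP message content.
type textContent struct {
	Type string `json:"type"`
	Text string `json:"text"`
}

// promptMessage is one message in a prompts/get result.
type promptMessage struct {
	Role    string      `json:"role"`
	Content textContent `json:"content"`
}

// getPromptResult mirrors the result of an MCP prompts/get request.
type getPromptResult struct {
	Description string          `json:"description,omitempty"`
	Messages    []promptMessage `json:"messages"`
}

// i3Primer is a hypothetical prompt that teaches a client's model the i3
// command conventions before it ever calls RunCommand.
func i3Primer() getPromptResult {
	return getPromptResult{
		Description: "Primer on controlling i3 through this server's tools",
		Messages: []promptMessage{{
			Role: "user",
			Content: textContent{Type: "text", Text: "You control the user's i3 window manager. " +
				"Search for windows first, then target them by con_id, e.g. " +
				"[con_id=123] kill or [con_id=123] move container to workspace number 7. " +
				"Never send a command without criteria unless you mean the focused window."},
		}},
	}
}

func main() {
	b, err := json.MarshalIndent(i3Primer(), "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(b))
}
```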

Here is an assorted list of projects and technologies I’m currently exploring but haven’t had time to write about.

- Beads - Steve Yegge’s issue tracker for your agent

- Claude Code Usage Monitor - How I track my Claude usage

- Langfuse - OTEL observability for your LLM

- Devstral Small 2 - Size matters: the q4_K_M quantization is 15GB, which might leave just enough room for context on my 24GB card
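Whether roughly 9GB of headroom is "enough room for context" mostly comes down to KV-cache size. With grouped-query attention, the cache stores one key and one value vector per KV head, per layer, per token. The sketch below does that arithmetic. The architecture numbers in `main` (40 layers, 8 KV heads, head dimension 128, fp16 cache) and the 7 GiB budget left after runtime overhead are placeholders, not Devstral Small 2's actual configuration. Plug in the real values from the model card.

```go
package main

import "fmt"

// kvBytesPerToken estimates KV-cache memory per token: a key and a value
// vector (hence the 2) for every KV head in every layer.
func kvBytesPerToken(layers, kvHeads, headDim, bytesPerElem int) int {
	return 2 * layers * kvHeads * headDim * bytesPerElem
}

// maxContextTokens returns how many tokens of KV cache fit in budgetBytes.
func maxContextTokens(budgetBytes int64, perToken int) int64 {
	return budgetBytes / int64(perToken)
}

func main() {
	// Placeholder architecture numbers, NOT the model's real config.
	perToken := kvBytesPerToken(40, 8, 128, 2) // fp16 cache entries
	// Assume ~7 GiB remains after the weights and runtime overhead.
	budget := int64(7) << 30
	fmt.Println(perToken, maxContextTokens(budget, perToken))
}
```

Quantizing the KV cache (for example to 8-bit) halves `bytesPerElem` and roughly doubles the context that fits, if the runtime supports it.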